Hovertext:

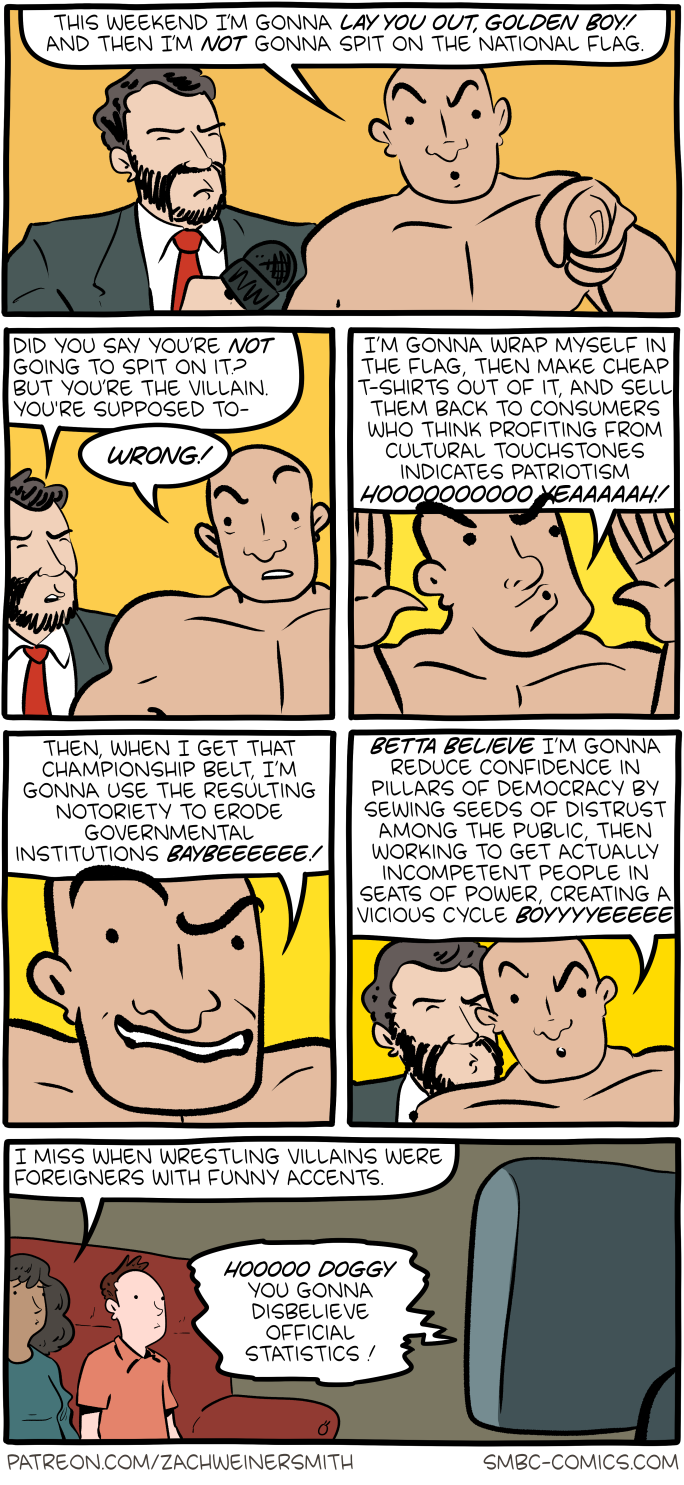

Weirdly, his authentic self is three teenagers in a trenchcoat.

Today's News:

This is, if I might be forgiven for invoking such anachronistic concepts, highly illegal:

This week, U.S. Immigration and Customs Enforcement (ICE) released new guidance on “facility visit and engagement protocol for Members of Congress and staff.”

“ICE detention locations and Field Offices are secure facilities. As such, all visitors are required to comply with [identity] verification and security screening requirements prior to entry,” it specified. “When planning to visit an ICE facility, ICE asks requests to be submitted at least 72 hours in advance.”

In fact, it’s perfectly legal for members of Congress to visit ICE detention facilities, even unannounced. And ICE’s attempt to circumvent that legal guarantee threatens the constitutional system of checks and balances.

The Further Consolidated Appropriations Act of 2024, which funded the government through September 2024, specified that the Department of Homeland Security (DHS) may not “prevent…a Member of Congress” or one of their employees “from entering, for the purpose of conducting oversight, any facility operated by or for the Department of Homeland Security used to detain or otherwise house aliens” or to modify the facility in advance of such a visit. It also clarified that the DHS cannot “require a Member of Congress to provide prior notice of the intent to enter a facility.”

ICE’s new guidance tries to get around this by stipulating that “ICE Field Offices are not detention facilities and fall outside of the [law’s] requirements.” Nevertheless, it adds that “while Member[s] of Congress are not required to provide advance notice for visits to ICE detention facilities, ICE requires a minimum of 24-hours’ notice for visits by congressional staff” (emphasis in the original). Further, “visit request[s] are not considered actionable until receipt of the request is acknowledged” by ICE.

[…]

For ICE to claim an all-encompassing right to operate in the dark, apart from the prying eyes of even a co-equal branch of government, flies in the face of the Constitution’s clear meaning.

“This unlawful policy is a smokescreen to deny Member visits to ICE offices across the country, which are holding migrants – and sometimes even U.S. citizens – for days at a time. They are therefore detention facilities and are subject to oversight and inspection at any time,” Rep. Bennie Thompson (D–Miss.), the ranking member on the House Homeland Security Committee, said in a statement. “There is no valid or legal reason for denying Member access to ICE facilities and DHS’s ever-changing justifications prove this….If ICE has nothing to hide, DHS must make its facilities available.”

“These are not detention facilities, they’re just facilities where people are being detained” is both farcical on its face and the kind of intelligence-insulting self-refutation that might be good enough for John Roberts, since a Republican administration is making it.

The post ICE announces unilateral amendment of legislative enactment to evade oversight by elected representatives appeared first on Lawyers, Guns & Money.

Hovertext:

I feel like I could at least respect it if political leaders knocked down legal barriers with a folding chair, instead of just ignoring them.